This document describes the results of performance tests for the WebSpellChecker Web API. Performance and load were tested on the following setup:

Our main goal was to observe the text processing response time and the server CPU utilization when 100 users simultaneously send requests in various languages to a server running WebSpellChecker v5.6.2.

We ran our tests continuously for each of the languages in the default language group (17 languages). The cache setting was enabled for all sets of tests. The following combinations of text to be checked were used for each language and for each user tier:

The measured metrics were response time and CPU utilization.
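As a rough illustration of how response time can be collected under concurrent load, here is a minimal sketch. It is not the harness used for these tests. The function names (`timed_call`, `run_load_test`, `summarize`) are hypothetical, and `fake_send_request` is a placeholder that only sleeps instead of calling the server. Threads start close together rather than at exactly the same instant, and CPU utilization would have to be monitored separately on the server.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def timed_call(send_request, text):
    """Return the wall-clock duration of one request, in seconds."""
    start = time.perf_counter()
    send_request(text)
    return time.perf_counter() - start


def run_load_test(send_request, text, users=100):
    """Issue one request per simulated user concurrently and collect response times."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(timed_call, send_request, text) for _ in range(users)]
        return [future.result() for future in futures]


def summarize(durations):
    """Aggregate response times into request count, average, and worst case."""
    return {
        "requests": len(durations),
        "avg_s": mean(durations),
        "max_s": max(durations),
    }


def fake_send_request(text):
    # Assumption: stands in for a real HTTP call to the server under test.
    time.sleep(0.01)


if __name__ == "__main__":
    sample_text = "word " * 1000  # roughly 1K words, as in the test texts
    durations = run_load_test(fake_send_request, sample_text, users=100)
    print(summarize(durations))
```

To try this against a real server, replace `fake_send_request` with a function that sends the text to your own endpoint and waits for the response.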

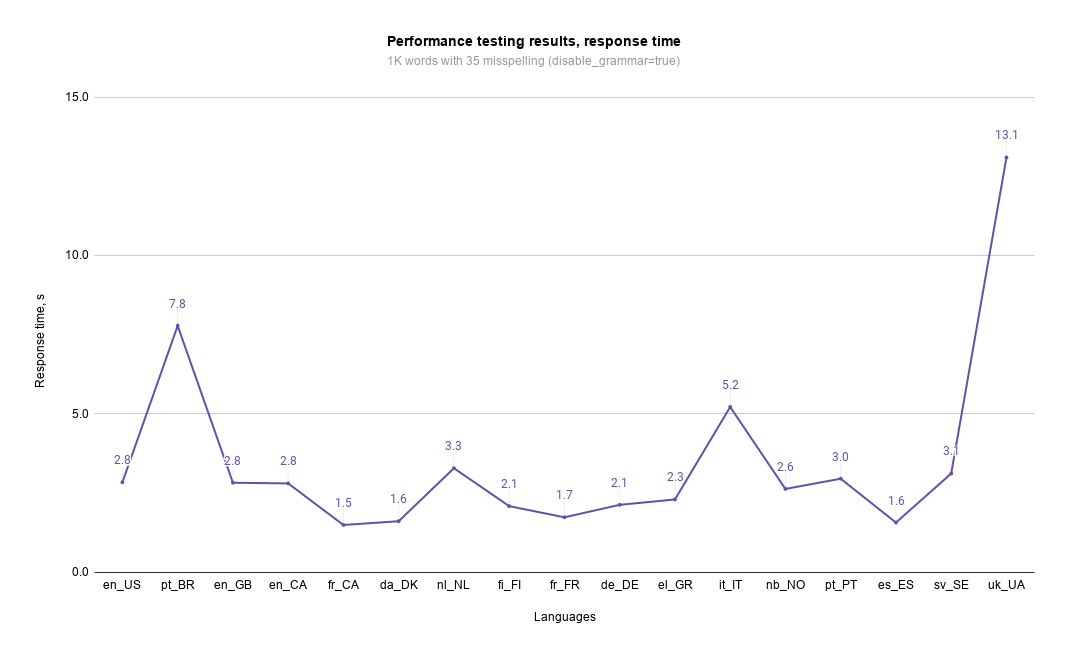

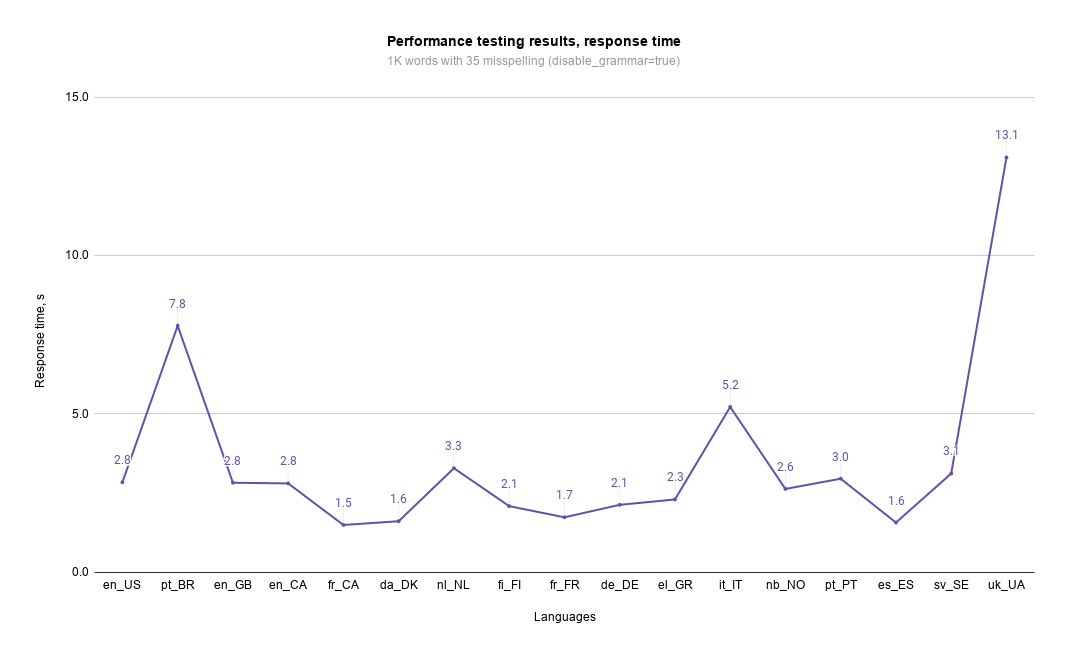

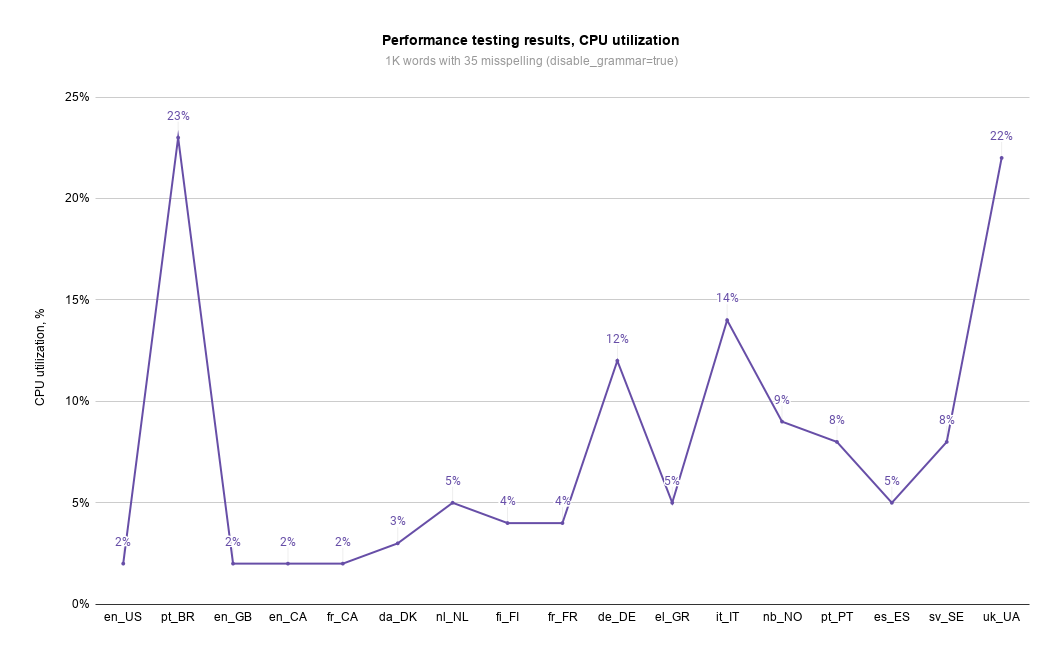

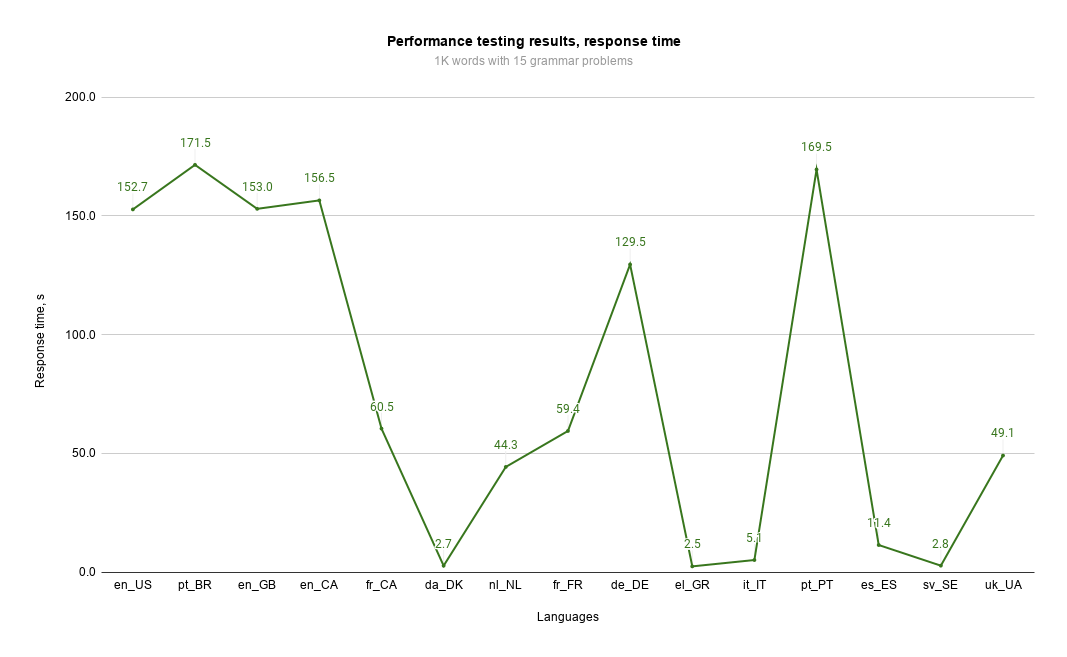

Our observations are presented in the charts below.

The chart below shows response time results aggregated by language when the 1K-word text contains only 35 misspellings.

The chart below shows CPU utilization results aggregated by language and user tier when the 1K-word text contains only 35 misspellings.

The chart below shows response time results aggregated by language when the 1K-word text contains only 15 grammar problems.

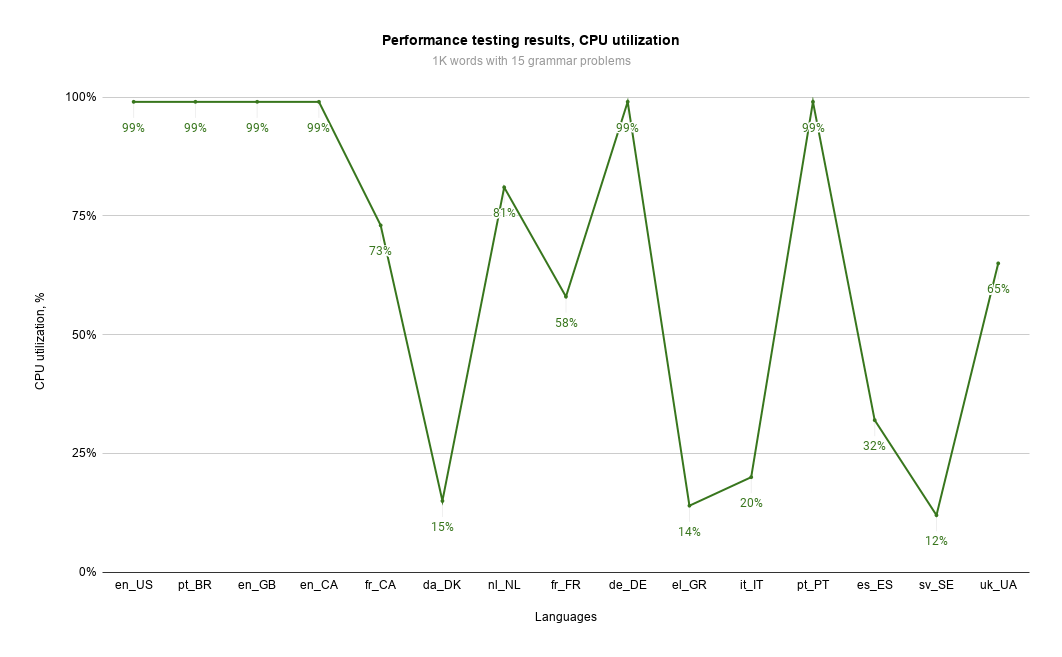

The chart below shows CPU utilization results aggregated by language when the 1K-word text contains only 15 grammar problems.

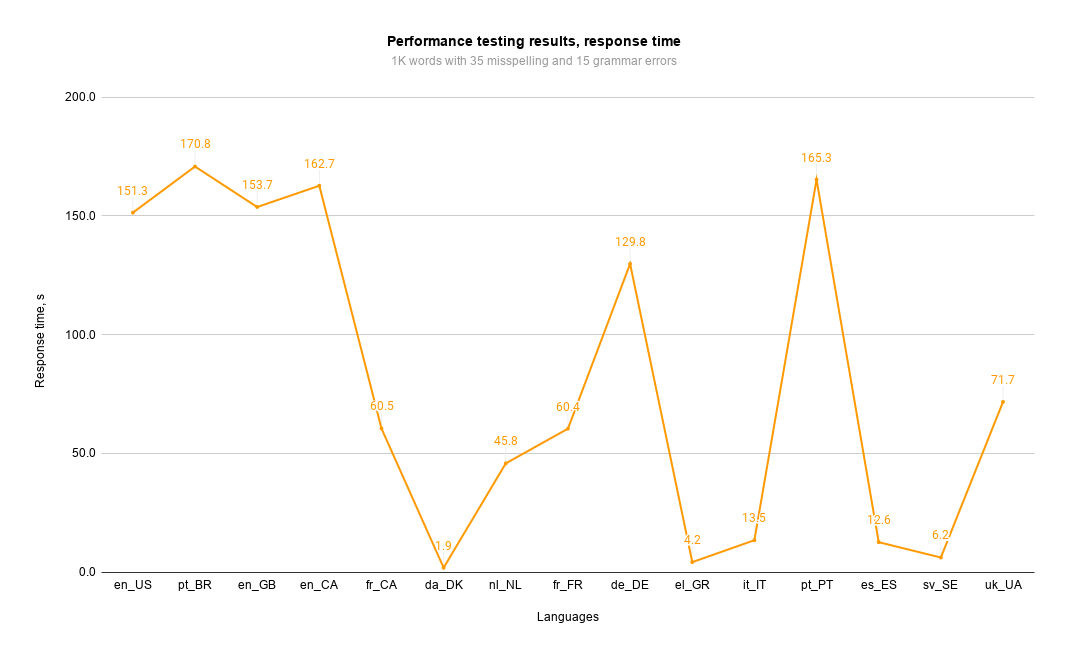

The chart below shows response time results aggregated by language and user tier when the 1K-word text contains 35 misspellings and 15 grammar problems.

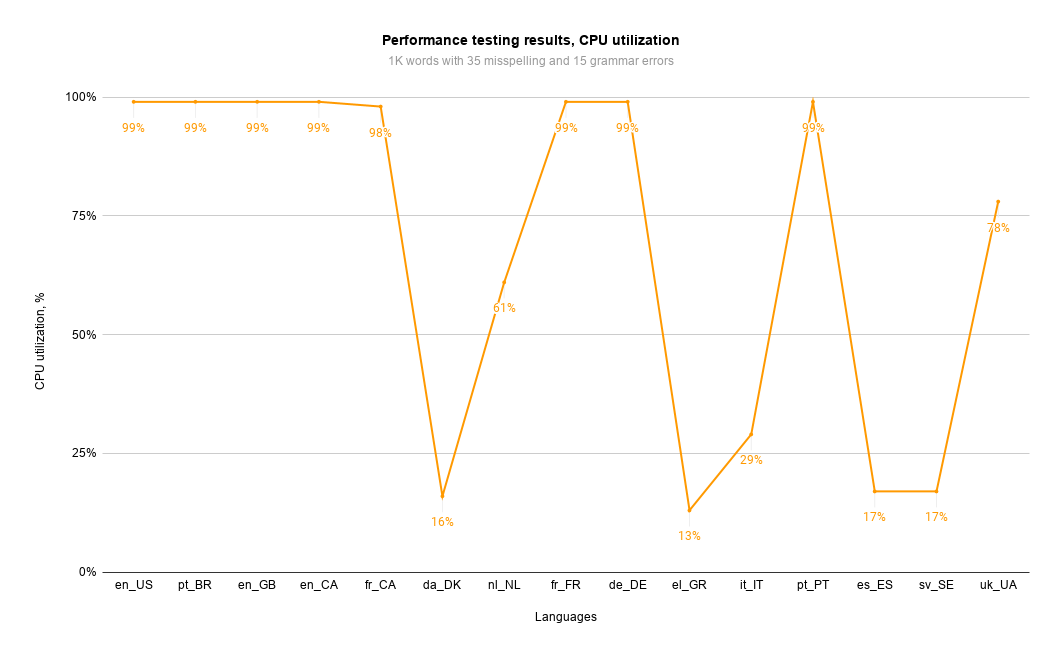

The chart below shows CPU utilization results aggregated by language and user tier when the 1K-word text contains 35 misspellings and 15 grammar problems.

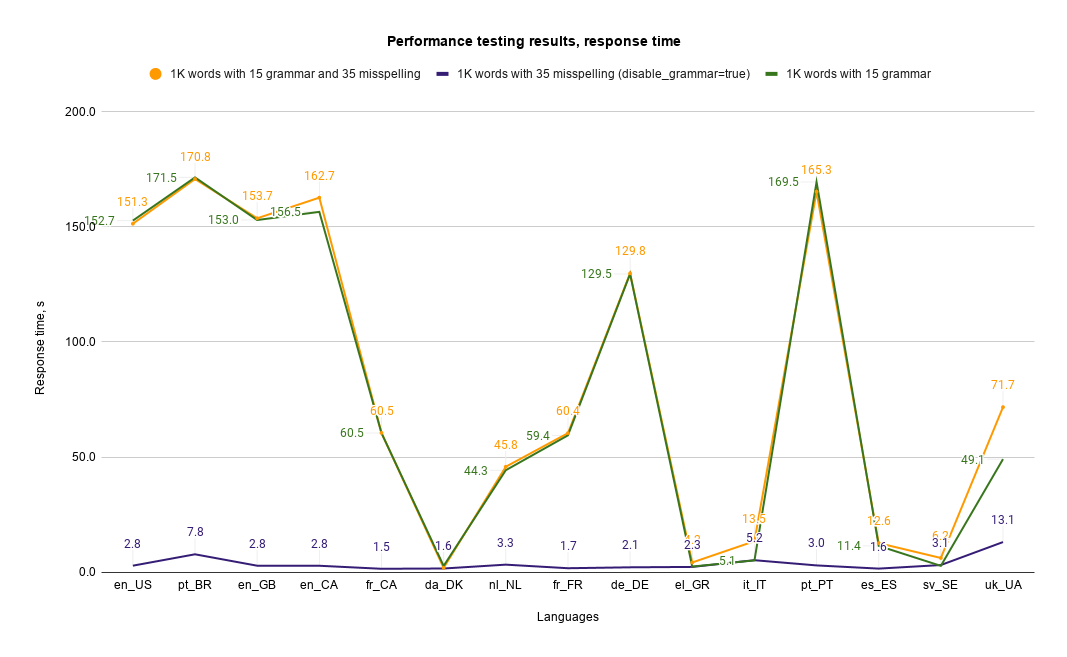

The chart below shows response time results aggregated by language and user tier when the 1K-word text contains 35 misspellings and/or 15 grammar problems.

Below are the outcomes and takeaways, together with our advice on hardware and software requirements and notes on performance issues that users may encounter:

During testing, we used API requests containing 1K words each, where 3.5% of the words are spelling errors and 1.5% are grammar errors. On average, a person writing in a second language makes around 4%-5% errors in total. Note that the request distribution mechanism and the size of each request in UI-based products are heavily optimized to ensure a smooth experience for users and to decrease the load on the servers.
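To relate these percentages to the counts used in the chart descriptions, the short sketch below does the arithmetic for a 1K-word request. The function name `expected_issue_counts` and its parameters are illustrative only and are not part of any WebSpellChecker API.

```python
def expected_issue_counts(words=1000, spelling_pct=3.5, grammar_pct=1.5):
    """Convert error rates (percent of words) into absolute counts per request."""
    spelling = round(words * spelling_pct / 100)
    grammar = round(words * grammar_pct / 100)
    total = spelling + grammar
    return {
        "spelling": spelling,
        "grammar": grammar,
        "total": total,
        "total_pct": 100 * total / words,
    }


if __name__ == "__main__":
    # For the test texts: 35 misspellings, 15 grammar problems, 5% in total.
    print(expected_issue_counts())
```

With the default values, the combined rate comes to 5%, at the upper end of the 4%-5% range quoted above.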

If you have any questions or comments regarding the outlined results, please feel free to contact us.